AXCXEPT Inc. — Sapporo, Japan

AI Consulting & LLM Engineering

22 years of enterprise IT. ¥50M+ delivered. 25+ open-source LLMs.

From infrastructure to AI — we build, ship, and prove it works.

RAGOps

Open-source RAG diagnostic engine.

Find what's broken. Fix it. Prove it worked.

"Your RAG is broken. We show you WHY and HOW TO FIX IT."

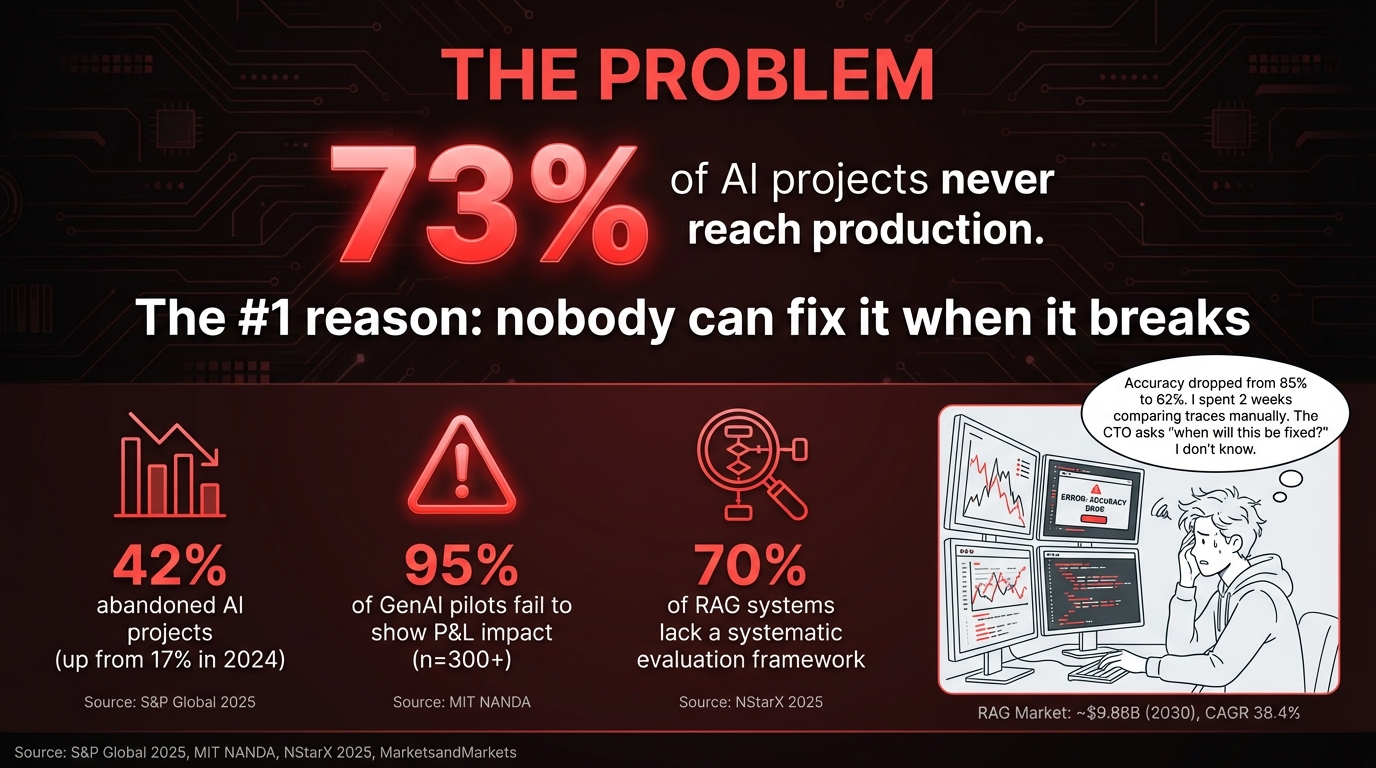

73% of AI projects never reach production

The #1 reason: nobody can fix it when it breaks.

Everyone shows THAT accuracy dropped.

Nobody shows WHY.

| Question | Typical tooling | RAGOps |
| --- | --- | --- |
| "What happened?" | Metrics dashboard | Metrics dashboard |
| "What's broken?" | ✗ You figure it out | ✓ "These 26 chunks from index X" |
| "How to fix?" | ✗ You guess | ✓ "Re-chunk with 512 tokens, re-index" |
| "Did it work?" | ✗ Manual re-run | ✓ Automated before/after proof |

3 Steps to Root Cause

From integration to proof-of-improvement in minutes.

Connect

3 lines of code. 2 minutes. Zero risk to your production pipeline.

    from ragops import Tracer

    tracer = Tracer(api_key="...")
    chain = RetrievalQA(..., callbacks=[tracer])

Diagnose

Deterministic root cause analysis. Not LLM guessing.

26 chunks from 'policy_docs' have an average relevance of 0.18. They appear in 142 of 167 queries but never contribute to correct answers.
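A finding like the one above can come from plain aggregation over traced retrievals, with no model call involved. The following is a minimal sketch of that idea, not the RAGOps implementation: the `Retrieval` record, the `flag_low_quality_chunks` helper, and both thresholds are assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Retrieval:
    """One retrieved chunk within one traced query (hypothetical record shape)."""
    query_id: str
    chunk_id: str
    relevance: float        # 0.0-1.0 relevance score for this chunk
    used_in_correct: bool   # True if the chunk supported a correct answer


def flag_low_quality_chunks(retrievals, max_avg_relevance=0.3, min_query_share=0.5):
    """Return chunk ids that are retrieved often, score low, and never help.

    Both thresholds are illustrative assumptions, not RAGOps defaults.
    """
    total_queries = len({r.query_id for r in retrievals})
    by_chunk = defaultdict(list)
    for r in retrievals:
        by_chunk[r.chunk_id].append(r)

    flagged = []
    for chunk_id, rows in by_chunk.items():
        avg_relevance = sum(r.relevance for r in rows) / len(rows)
        query_share = len({r.query_id for r in rows}) / total_queries
        never_helps = not any(r.used_in_correct for r in rows)
        if (avg_relevance <= max_avg_relevance
                and query_share >= min_query_share
                and never_helps):
            flagged.append(chunk_id)
    return sorted(flagged)
```

Because the check only counts and averages, the same traces always yield the same flagged chunks.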

Fix & Prove

Prescriptive fix suggestions with before/after quantified proof.

Context Precision improved. Root cause "Chunk Quality" resolved.

What RAGOps Does

Deterministic diagnosis. Not dashboard-only observability.

5 Evaluation Metrics

RAGAS-aligned, reference-free evaluation. Faithfulness, Context Precision, Answer Relevancy, Context Relevance, Response Groundedness. Scored 0.0–1.0 with color-coded health badges.
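As a rough illustration of the color-coded badges, a score can be mapped to a traffic-light label with two cutoffs. The `health_badge` function is hypothetical, and the cutoffs are assumptions, picked only so that the sample scores in the `axcxept-eval` example on this page (0.91, 0.78, 0.45) land on green, yellow, and red.

```python
def health_badge(score, green_at=0.8, yellow_at=0.6):
    """Map a 0.0-1.0 metric score to a traffic-light label.

    The cutoffs are assumptions chosen for illustration.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be within 0.0-1.0, got {score}")
    if score >= green_at:
        return "green"
    if score >= yellow_at:
        return "yellow"
    return "red"
```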

6 Root Cause Rules

Deterministic rule engine, not LLM guessing. Chunk Quality, Query-Chunk Mismatch, Index Freshness, Retrieval Distribution Anomaly, Permission Scope Leak, Reranking Inefficiency.
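One way to picture a deterministic rule engine is as a list of pure functions, each inspecting collected statistics and either returning a finding or nothing. The sketch below assumes that shape. The `Finding` type, the stats keys, the 30-day default, and both rule bodies are hypothetical and only borrow the rule names listed above.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Finding:
    root_cause: str
    evidence: str


# A rule is a pure function of the collected stats: same input, same finding.
Rule = Callable[[dict], Optional[Finding]]


def index_freshness_rule(stats: dict) -> Optional[Finding]:
    """Flag an index whose last rebuild is older than the allowed age."""
    age = stats["index_age_days"]
    limit = stats.get("max_index_age_days", 30)  # assumed default
    if age > limit:
        return Finding("Index Freshness", f"index is {age} days old (limit {limit})")
    return None


def reranking_rule(stats: dict) -> Optional[Finding]:
    """Flag a reranker that does not improve precision over raw retrieval."""
    gain = stats["precision_after_rerank"] - stats["precision_before_rerank"]
    if gain <= 0:
        return Finding("Reranking Inefficiency", f"precision gain {gain:+.2f}")
    return None


def diagnose(stats: dict, rules: List[Rule]) -> List[Finding]:
    """Run every rule in order and collect the findings that fire."""
    findings = []
    for rule in rules:
        finding = rule(stats)
        if finding is not None:
            findings.append(finding)
    return findings
```

Keeping each rule free of side effects and model calls is what makes a repeated diagnosis reproducible.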

Prescriptions

Each root cause comes with verification tasks — not automatic fixes. AI suggests, human decides. Concrete steps to resolve the issue.

Before/After Proof

Quantified improvement evidence. Re-evaluate after fixing and see the score change. Prove the fix worked with data, not hope.
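A before/after comparison can be as simple as diffing two sets of metric scores. This sketch assumes each evaluation run is a plain dict of metric name to score. The `compare_runs` helper and its `min_delta` noise floor are illustrative assumptions, not part of RAGOps or `axcxept-eval`.

```python
def compare_runs(before, after, min_delta=0.05):
    """Compare two metric dicts and label each shared metric's change.

    `min_delta` is an assumed noise floor; smaller changes count as unchanged.
    """
    report = {}
    for metric in sorted(before.keys() & after.keys()):
        delta = round(after[metric] - before[metric], 4)
        if delta >= min_delta:
            status = "improved"
        elif delta <= -min_delta:
            status = "regressed"
        else:
            status = "unchanged"
        report[metric] = {
            "before": before[metric],
            "after": after[metric],
            "delta": delta,
            "status": status,
        }
    return report
```

Reporting the delta alongside both raw scores keeps the evidence auditable: a reader can check the label against the numbers.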

Built on Open Source

axcxept-eval: MIT license. 5 metrics. pip install.

axcxept-eval

Open-source RAG evaluation library. RAGAS-aligned 5-metric scoring. Use standalone or integrate with the RAGOps platform for full diagnosis.

- ✓ MIT License — free forever

- ✓ 5 evaluation metrics, reference-free

- ✓ pip install axcxept-eval

- ✓ Works with any LLM framework

    $ pip install axcxept-eval

    # Evaluate your RAG traces
    from axcxept_eval import evaluate

    result = evaluate(
        query="What is the refund policy?",
        contexts=[chunk1, chunk2, chunk3],
        answer="The refund policy states...",
    )
    # result.faithfulness → 0.91 🟢
    # result.precision → 0.45 🔴
    # result.relevancy → 0.78 🟡

Pricing

Start free. Scale when ready.

1 full diagnostic cycle. Connect → Diagnose → Prescribe → Re-evaluate. Experience the entire value loop.

Coming Soon

Unlimited evaluations, drift alerts, prescriptions, API access, data export.

Coming Soon

HITL workflow, regression tests, up to 10 members, full evaluation history.

Coming Soon

Evidence Pack, SSO/RBAC, SLA, custom integrations, dedicated support.

Coming Soon

Services & Products

22 years of enterprise IT. Cloud architecture, LLM development, and AI implementation.

EZO LLM Series

Lightweight, high-performance Japanese LLMs. MT-Bench 9.08 / J-MT-Bench 8.87: GPT-4o class on 24GB VRAM. 25+ models on HuggingFace, published as open source.

Enterprise AI Solutions

End-to-end AI implementation: cloud architecture, custom LLM training, API integration, MLOps. We start from business problems, not models.

- ✓ Cloud infrastructure (Azure / AWS / GCP)

- ✓ Custom LLM fine-tuning & deployment

- ✓ On-premise / VPC isolation support

SaaS Products

AI-powered products built in-house, serving users globally.

- 💬 AI Kaiwa — AI English Conversation

- 🇯🇵 NihongoBuddy — AI Japanese Learning

- 📄 PDF2Sheet — PDF to Excel Conversion

Looking for enterprise AI consulting? We accept engagements for RAG optimization, LLM deployment, and cloud AI architecture.

Book a Consultation

News

Released "Pseudo GRPO/PPO approach for low-cost Japanese LLM performance improvement"

Released ultra-compact LLM "QwQ-32B-Distill-Qwen-1.5B-Alpha" specialized for math reasoning

Panel discussion at ASIAWORLD-EXPO, Hong Kong (Jumpstarter)

Speaker at ALIBABA CLOUD TECH DAY TOKYO

About

| Company | AXCXEPT Inc. |

| CEO | Kazuya Hodatsu |

| Business | AI/LLM Consulting & Implementation, LLM Development (EZO Series), SaaS/App Development, RAGOps Platform |

| Location | Sapporo, Hokkaido, Japan |

Founder

Kazuya Hodatsu

CEO & Founder, AXCXEPT Inc.

22 years in enterprise IT, from infrastructure to AI. Built teams, shipped products, and led multi-million-dollar projects at leading global technology companies.

"LLM engineering, cloud architecture, and enterprise delivery — I bring all three to every engagement."

Contact

Questions, partnerships, or just want to chat about RAG systems.